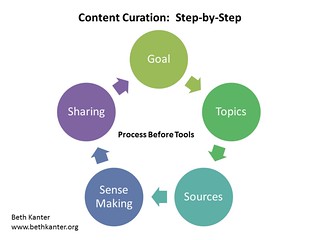

Content curation is the collection and sharing of interesting, informative, or entertaining content from within a particular niche. It’s a great way of establishing a reputation as an authority and gathering followers with a particular set of interests.

For businesses, content curation helps demonstrate expertise, costs less than content creation, and, perhaps most importantly, helps cultivate relationships with potential clients, customers, vendors, and partners.

In an age when social media and content marketing are flourishing, the sheer volume of content available, of widely varying quality, can make finding just the right material to share a time-consuming endeavor.

Google have introduced a